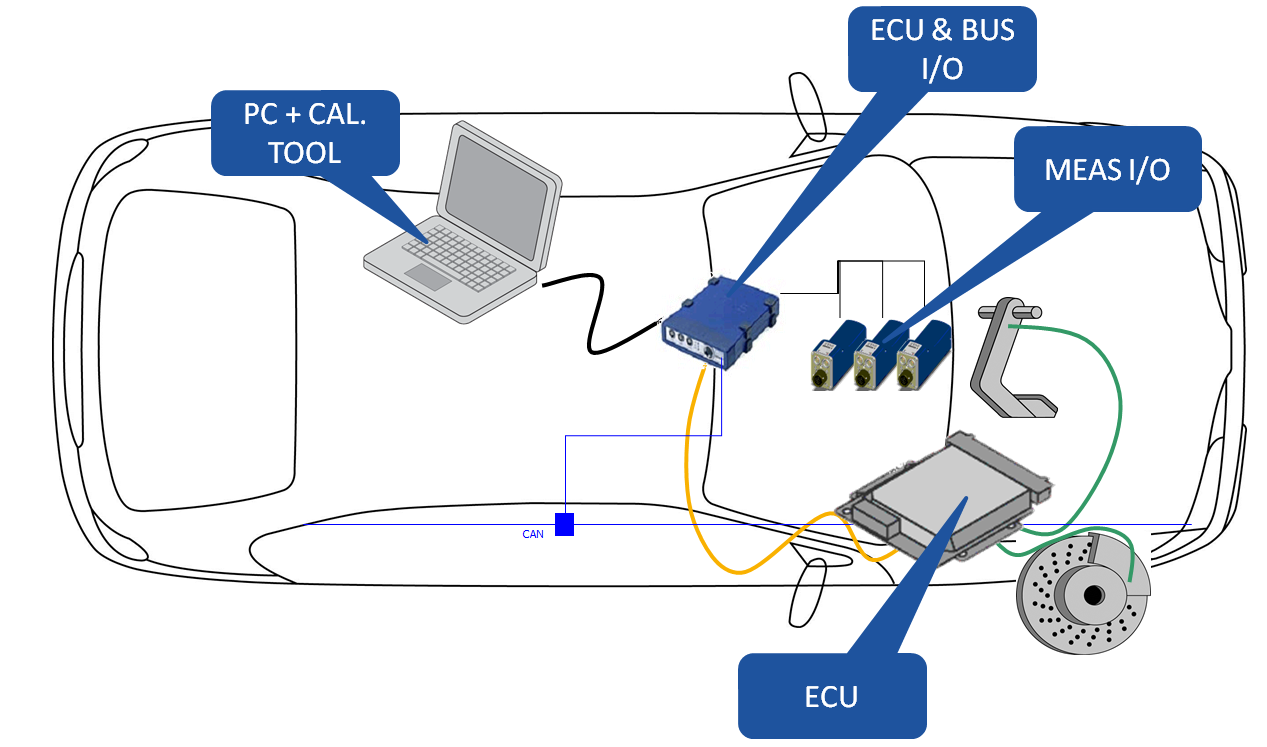

The ECU (Electronic Control Unit) used in the development process is not the same as a production ECU. It is specially equipped with a calibration interface that you will never see on a production ECU!

There are a couple of technologies available to 'talk' to the ECU. One is a simple CAN-based interface, with calibration interface driver software installed in the ECU (known as CCP, the CAN Calibration Protocol). In this case, the ECU needs extra memory (compared to standard) to facilitate the online handling of the measurement labels. Another option is to equip the ECU with an 'emulator'. This device is installed inside the ECU and has direct read-write access to the data bus inside the microcontroller. It also has additional memory and processing capability in order to directly handle communication with the PC running the calibration tool software. Generally speaking, the emulator has superior performance to the CAN solution, but is more complex and costly to implement.

Fig 1 – ECU emulators (also known as ETK) can be parallel (i.e. directly connected to the data bus) or serial (i.e. directly connected to the microcontroller) (source: ETAS)

Once you have the physical connection to the ECU, you need to understand what is going on inside and be able to make some sense of it. You also need to be able to make changes to parameters and store or apply them. To facilitate this, you need two pieces of information: the actual calibration of the ECU, which is stored in a HEX file, and information about how to read the HEX file, which is provided by the A2L file.

The HEX file holds raw binary data, so you need some additional information (from the A2L file) to know which part of the memory inside the microcontroller is used for which values, and how to convert the 1s and 0s into something meaningful: a physical value such as engine speed. The A2L and HEX file formats are standardised, and the files are delivered with every development ECU to allow the Calibration Engineer to access and calibrate the ECU.
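To make the idea concrete, here is a minimal Python sketch of what the A2L information lets a tool do: find the right bytes in the memory image and scale them into a physical value. The label description, the address, the data type and the linear scaling (factor and offset) are all invented for illustration. A real A2L file describes these things in its own standardised syntax, which is not reproduced here.

```python
import struct

# Hypothetical description of one measurement label, standing in for the
# information an A2L file would provide. All values are invented.
ENGINE_SPEED = {
    "address": 0x0004,  # byte offset of the value inside the memory image
    "format": "<H",     # unsigned 16-bit integer, little-endian
    "factor": 0.25,     # physical = raw * factor + offset
    "offset": 0.0,
    "unit": "rpm",
}


def read_physical(memory: bytes, label: dict) -> float:
    """Decode the raw value at the label's address and scale it."""
    (raw,) = struct.unpack_from(label["format"], memory, label["address"])
    return raw * label["factor"] + label["offset"]


def physical_to_raw(value: float, label: dict) -> int:
    """Inverse conversion, as needed when writing a calibrated value back."""
    return round((value - label["offset"]) / label["factor"])


# Toy memory image: raw count 12000 (0x2EE0) stored little-endian at offset 4.
memory_image = bytes([0x00, 0x00, 0x00, 0x00, 0xE0, 0x2E, 0x00, 0x00])

speed = read_physical(memory_image, ENGINE_SPEED)
print(f"Engine speed: {speed} {ENGINE_SPEED['unit']}")
```

Without the label description, the two bytes `E0 2E` are just numbers. With it, they become an engine speed.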

Fig 2 – Once you have access to the ECU, you need to understand what’s going on inside; the A2L and HEX files provide this! (source: ETAS)

The task, measuring and calibrating

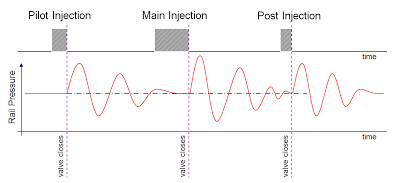

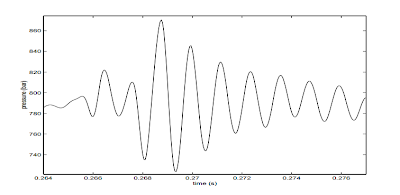

Now that we know the hardware and software involved, what does the calibration task actually mean, and how is it done? With the set-up described above, we can access the ECU during run-time and make changes, for example changing ignition timing to give the best performance at any given engine operating condition (speed, load). The ignition timing is held in a ‘map’. The map covers all engine operating conditions and provides an ignition timing demand value as a set point. Using the calibration tool, the map can be adjusted during testing and optimised to give the best performance. Note that a map is considered as a single value (or label) to be calibrated.

Fig 3 – Calibration is round-trip engineering: making adjustments, measuring, analysing and then adjusting again (source: ETAS)

The Calibration Engineer, using the calibration tool, has access to all the labels during testing, so they can adjust the calibration labels and see the responses by monitoring the measurement labels. In addition, the engineer may want to measure some additional information, so the calibration tool is often used in conjunction with measurement hardware that records physical values from additional sensors. For example, exhaust temperature may be measured using sensors installed on a development vehicle in order to calibrate the exhaust temperature model inside the ECU, which is used on the production ECU for component protection.

Fig 4 – Screenshot of the calibration tool, showing maps and curves (calibration labels) that need to be adjusted and optimised (source: ATI)

Fig 5 – Overview of a typical vehicle measurement set-up for calibrating the ECU (Source: ETAS)

During the development cycle, the Calibration Engineer will adjust and change many values inside the ECU in order to optimise and characterise the engine. In a modern powertrain, this can take teams of people months, or even years, to complete. Consider an ECU with 30,000 labels, which will be fitted to 10 variants of vehicles. Each vehicle has a different calibration in order to differentiate it in the market. Each vehicle has to be calibrated with respect to emissions, performance, fuel consumption, drivability and on-board diagnostics. Each one of these tasks is considerable, and they all impact on each other. It is very typical that calibration of a single ECU variant is managed by large teams of Engineers, often with specialist knowledge of how a function works and how to calibrate it. For example, there may be a team of Engineers calibrating emissions, which will include a specialist person or team who can deal with the start/stop system or the after-treatment system. This complex environment creates masses of data (calibration and measurement) that need to be handled, analysed, controlled and merged in order to create the final ‘master’ calibration that will be signed off by the Chief Calibration Engineer. This is the final version that will then be deployed on the production vehicle. This final calibration is normally ‘flashed’ into the ECU during the vehicle production cycle, prior to the final vehicle test in the factory and before the vehicle is shipped.
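The merging step can be pictured with a small Python sketch. The label names, the team names and the rule used here (the first change to a label is kept, and any different change to that label by a later team is flagged for review) are purely hypothetical. Real calibration data management tools have their own workflows.

```python
def merge_calibrations(base, deliveries):
    """Merge each team's changed labels into a copy of the base calibration.

    Returns the merged calibration and a dict of conflicts: labels that
    more than one team changed to different values.
    """
    master = dict(base)
    changed_by = {}  # label name -> team whose change was applied
    conflicts = {}   # label name -> list of teams involved
    for team, labels in deliveries.items():
        for name, value in labels.items():
            if value == base.get(name):
                continue  # label not actually changed by this team
            if name in changed_by and master[name] != value:
                conflicts.setdefault(name, [changed_by[name]]).append(team)
                continue  # keep the first change, flag for manual review
            master[name] = value
            changed_by[name] = team
    return master, conflicts


# Hypothetical base calibration and two team deliveries.
base = {"IgnitionMap": 10, "IdleSpeed": 800, "LambdaTarget": 1.0}
deliveries = {
    "emissions": {"LambdaTarget": 0.98, "IdleSpeed": 820},
    "drivability": {"IdleSpeed": 850, "IgnitionMap": 12},
}

master, conflicts = merge_calibrations(base, deliveries)
print(master)
print(conflicts)
```

With 30,000 labels and several teams, conflicts like the idle speed example are exactly where the tasks 'impact on each other', and someone has to decide which value goes into the master calibration.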

The future for ECU development

It is recognised in industry that the calibration task, and the associated software development for controllers, is becoming the majority task in the development of a modern vehicle or powertrain. This trend is unlikely to reverse, and the task is becoming impossible to manage efficiently with traditional technical approaches. To deal with this, new methods are being developed, optimised and deployed. A popular approach is model-based engineering. This means reducing the amount of testing by making some strategic measurements, then fitting a mathematical model to those measurements so that it provides accurate predictions in the areas where no measurement was made. For example, take a simple map which is 8 by 8 in size. This means 8 x 8 = 64 data points, so in order to populate this map we would need 64 measurements! However, it may be possible to make 20 strategic measurements, fit a model to this data, and then make 12 further measurements to validate the model (20 + 12 = 32 in total), which would reduce the number of measurements by half. The key here is to define the measurement strategy effectively, so that a model can be fitted accurately. This needs an approach called design-of-experiment (DOE).
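The 8 by 8 example can be sketched in a few lines of Python. Everything specific here is an assumption for illustration: the 'measurement' is a made-up smooth function standing in for a test bed, the model is a simple quadratic surface fitted by least squares, and the 32 test points are picked with a simple spreading pattern instead of a formal DOE method. Because the stand-in response is itself quadratic and free of noise, the fit is essentially exact. Real engine data would not be this kind.

```python
def features(x, y):
    """Terms of a quadratic surface model in x and y."""
    return [1.0, x, y, x * x, y * y, x * y]


def measure(x, y):
    """Stand-in for a test bed measurement (hypothetical response)."""
    return 5.0 + 2.0 * x + 3.0 * y - 0.1 * x * x - 0.2 * y * y + 0.05 * x * y


def solve(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit(points, values):
    """Least-squares fit of the model via the normal equations."""
    rows = [features(x, y) for x, y in points]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * v for r, v in zip(rows, values)) for i in range(k)]
    return solve(ata, atb)


def predict(coeffs, x, y):
    return sum(c * f for c, f in zip(coeffs, features(x, y)))


# 32 distinct grid points spread over the 8 x 8 map (illustrative pattern).
plan = [(i % 8, (3 * i + i // 8) % 8) for i in range(32)]
train, validate = plan[:20], plan[20:]

coeffs = fit(train, [measure(x, y) for x, y in train])
worst_error = max(abs(predict(coeffs, x, y) - measure(x, y)) for x, y in validate)

# Populate all 64 cells of the map from the model.
full_map = [[predict(coeffs, x, y) for y in range(8)] for x in range(8)]
measurements_saved = 64 - len(plan)
print(f"worst validation error: {worst_error:.2e}, saved: {measurements_saved}")
```

The 12 validation points are the safety net. If the worst validation error is too large, the model (or the choice of measurement points) is not good enough and more measurements are needed.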

Fig 6 – The more parameters to be adjusted, the more work to be done. With current systems, the complexity is such that a traditional approach would take years to calibrate an ECU!

Another fast-moving trend to accelerate the development of ECUs is the concept of ‘frontloading’. This means moving specific aspects or tasks earlier in the cycle, where they can be performed in a lower cost environment. For example, if a vehicle manufacturer did all their development with prototype vehicles, the cost would be massive, as many, many vehicles would be needed. So, to save time and money, some of the tasks can be done in a test facility, which is generally cheaper because the facility can be adapted and re-used again and again. A good example here is the engine or transmission: a large amount of development can be done on a test bed, with just final adjustments and refinements made in a vehicle test.

With current technology developments, this has moved forward a step. Much development work can now be done on a PC with a simulation environment, and this is very applicable to ECU development work. ECU software and functions can be developed and tested easily in virtual environments. A full ECU, with a vehicle, driver and environment, can be simulated on a PC, and the simulation can be run faster than real-time. This means a 20 minute test run can be reduced to a few seconds (depending on the complexity and PC processing power), providing simulated results for analysis and development. A typical next step would be to have the ECU itself in a test environment, making it possible to test the actual ECU code, running on the ECU hardware, with physical connections to electrical stimulation, but with a completely virtual test environment (driver, vehicle, environment). This approach is known as HiL testing: Hardware-in-the-Loop!
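A toy version of 'faster than real-time' is easy to show in Python. The sketch below runs a 20 minute closed-loop test of a very simple controller (standing in for an ECU function) against a very simple vehicle model. The controller gain, vehicle mass, drag coefficient and step size are all invented for this example, and a real simulation environment is far more detailed.

```python
import time


def controller(target_speed, speed, kp=800.0):
    """Stand-in for an ECU function: proportional speed control.

    Returns a drive force in N, limited to the range 0..4000.
    """
    return min(max(kp * (target_speed - speed), 0.0), 4000.0)


def vehicle_step(speed, force, dt, mass=1200.0, drag=0.4):
    """Advance a point-mass vehicle model by one time step (explicit Euler)."""
    accel = (force - drag * speed * speed) / mass
    return max(speed + accel * dt, 0.0)


def run(duration_s=1200.0, dt=0.01, target_speed=25.0):
    """Simulate the closed loop for duration_s seconds of simulated time."""
    steps = int(round(duration_s / dt))
    speed = 0.0
    for _ in range(steps):
        force = controller(target_speed, speed)
        speed = vehicle_step(speed, force, dt)
    return steps * dt, speed


start = time.perf_counter()
simulated_s, final_speed = run()
wall_s = time.perf_counter() - start

print(f"simulated {simulated_s:.0f} s in {wall_s:.2f} s of real time")
print(f"final vehicle speed: {final_speed:.2f} m/s")
```

In a HiL set-up the `controller` function would be replaced by the real ECU, connected through electrical signals, and the loop would then have to run in step with real time.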

Fig 7 – Signal flows in a real system, compared to HiL simulation (source: dSPACE)

Fig 8 – Typical development paths and tasks for ECU development (source: ETAS)

There is no doubt that developing and calibrating an ECU is a complex task! Many tools and technologies are available to help, and many more will need to be developed to keep up with the demand for more sophistication!